Data Analysis and Information SecuritY (DAISY) Lab

Contact Us

The DAISY Lab is led by Prof. Yingying (Jennifer) Chen. You are always welcome to stop by her office at CoRE Building 516 or EE Building 128 for an inspiring research discussion!

Current Research Areas

As information technologies and mobile devices continue to integrate into the fabric of society, the volume of sensing data is growing rapidly, and with it the demand for efficient data analysis models. At the same time, the information infrastructure is increasingly exposed to malicious attacks. Because so much data is transmitted from mobile devices across networks and ultimately onto the Internet, two central questions arise: how much information can be "sensed" from it, and where the boundaries of its "fair and responsible use" lie. The Data Analysis and Information Security (DAISY) Laboratory focuses its research and education on Machine Learning and Large Language Models (LLMs) in Mobile Computing/Sensing, the Internet of Things (IoT), Security in AI/ML Systems, Smart Healthcare, and On-Device Artificial Intelligence (AI).

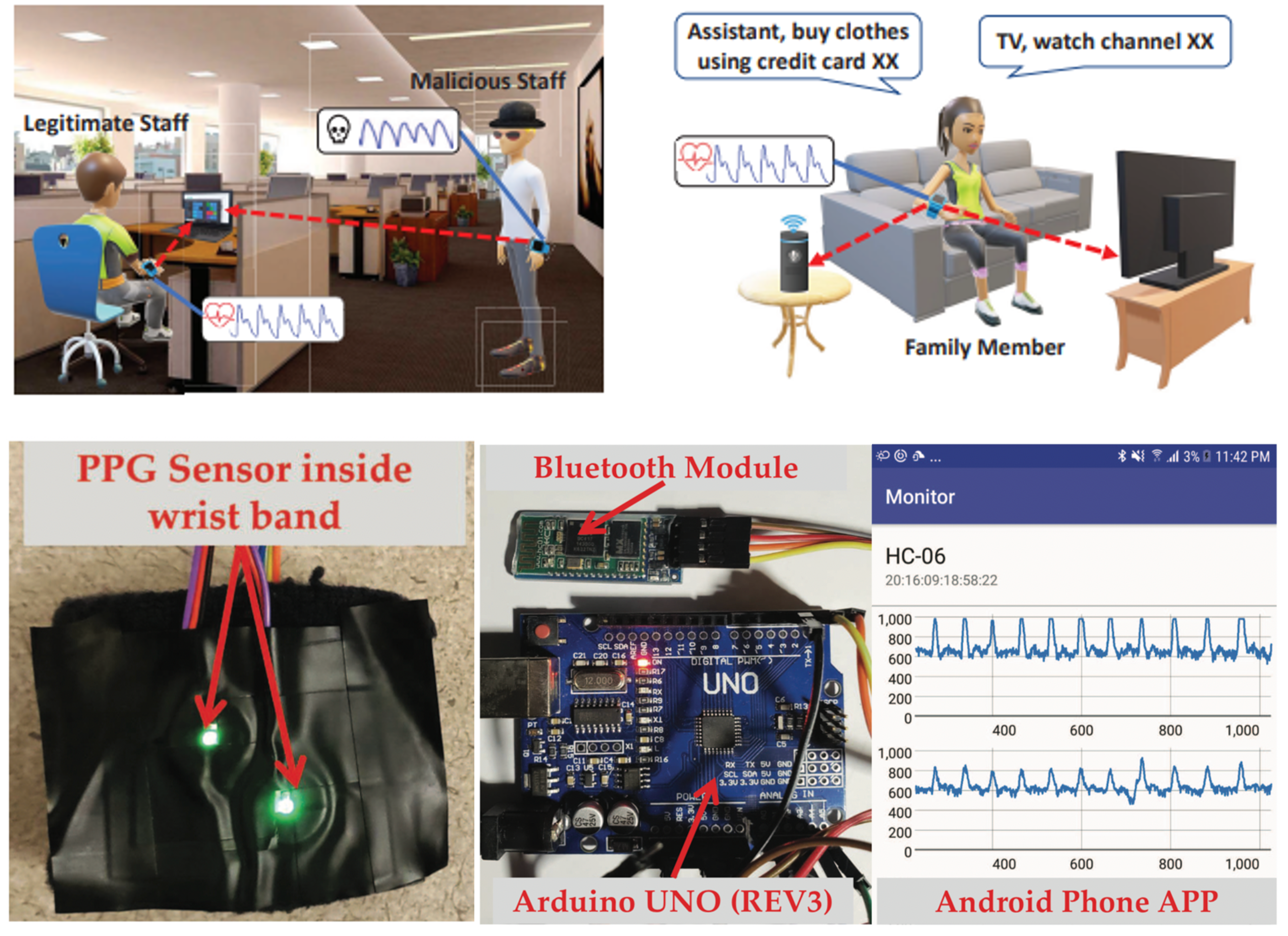

TrueHeart: Continuous Authentication on Wrist-worn Wearables Using PPG-based Biometrics

We design a low-cost user authentication system (TrueHeart) that leverages intrinsic cardiac biometrics extracted from photoplethysmography (PPG) sensors to verify user identity. Our system uses only the PPG sensors already available on wrist-worn wearable devices and requires no additional user effort.
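To make the idea of PPG-based continuous authentication more concrete, here is a toy sketch in Python. It is not TrueHeart's actual pipeline: the naive peak detector, the averaged cardiac-cycle template, the RMS distance, and the `threshold` value are all illustrative assumptions, and the helper names (`find_peaks`, `resample`, `normalize`, `cycle_template`, `verify`) are hypothetical. The sketch enrolls a user by averaging normalized beat-to-beat PPG cycles into a template, then accepts a later window only if its averaged cycle shape stays close to that template.

```python
import math


def find_peaks(signal):
    """Return indices of local maxima above the window mean (a naive beat detector)."""
    mean = sum(signal) / len(signal)
    return [
        i
        for i in range(1, len(signal) - 1)
        if signal[i] > mean and signal[i] >= signal[i - 1] and signal[i] > signal[i + 1]
    ]


def resample(segment, n):
    """Linearly interpolate a segment onto n evenly spaced points so cycles align."""
    last = len(segment) - 1
    out = []
    for k in range(n):
        pos = k * last / (n - 1)
        lo = int(pos)
        hi = min(lo + 1, last)
        frac = pos - lo
        out.append(segment[lo] * (1 - frac) + segment[hi] * frac)
    return out


def normalize(values):
    """Z-score a cycle so that its shape, not its absolute amplitude, drives the comparison."""
    m = sum(values) / len(values)
    sd = math.sqrt(sum((x - m) ** 2 for x in values) / len(values)) or 1.0
    return [(x - m) / sd for x in values]


def cycle_template(signal, n=32):
    """Average the normalized peak-to-peak cycles found in a PPG window (hypothetical template)."""
    peaks = find_peaks(signal)
    cycles = [normalize(resample(signal[a : b + 1], n)) for a, b in zip(peaks, peaks[1:])]
    if not cycles:
        raise ValueError("need at least two detected beats")
    return [sum(c[i] for c in cycles) / len(cycles) for i in range(n)]


def verify(template, window, threshold=0.3, n=32):
    """Accept the window if its averaged cycle is within `threshold` RMS distance of the template."""
    probe = cycle_template(window, n)
    rms = math.sqrt(sum((a - b) ** 2 for a, b in zip(template, probe)) / n)
    return rms <= threshold


# Hypothetical usage with a synthetic, perfectly periodic "PPG" signal (25 samples per beat).
enrolled = [math.sin(2 * math.pi * k / 25) for k in range(250)]
template = cycle_template(enrolled[:150])
print(verify(template, enrolled[50:250]))  # True: same pulse shape
```

In a continuous setting, `verify` would be re-run on each new sliding window of PPG samples; the synthetic sine wave here only stands in for real sensor data.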

PPG-based Finger-level Gesture Recognition Leveraging Wearables

We also demonstrate that the PPG sensors in wrist-worn wearable devices can be leveraged to enable finger-level gesture recognition, which could facilitate many emerging forms of human-computer interaction (e.g., sign-language interpretation and virtual reality). We introduce the first PPG-based gesture recognition system that can differentiate fine-grained hand gestures at the finger level using commodity wearable devices.
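As a rough illustration of how PPG windows might be mapped to gesture labels, the sketch below trains a nearest-centroid classifier on four hand-picked window statistics. This is an assumption for illustration only, not the lab's recognition model: the feature set, the `features` helper, the `NearestCentroid` class, and the gesture labels and synthetic signals in the usage example are all hypothetical.

```python
import math
from collections import defaultdict


def features(window):
    """Hypothetical per-window features: mean, std, peak-to-peak range, first-difference energy."""
    n = len(window)
    mean = sum(window) / n
    std = math.sqrt(sum((x - mean) ** 2 for x in window) / n)
    ptp = max(window) - min(window)
    diff_energy = sum((b - a) ** 2 for a, b in zip(window, window[1:])) / (n - 1)
    return [mean, std, ptp, diff_energy]


class NearestCentroid:
    """Assigns a window to the gesture whose mean (z-scored) feature vector is closest."""

    def fit(self, windows, labels):
        feats = [features(w) for w in windows]
        dims = len(feats[0])
        # Z-score statistics come from the training set only.
        self.mu = [sum(f[d] for f in feats) / len(feats) for d in range(dims)]
        self.sd = [
            math.sqrt(sum((f[d] - self.mu[d]) ** 2 for f in feats) / len(feats)) or 1.0
            for d in range(dims)
        ]
        groups = defaultdict(list)
        for f, y in zip(feats, labels):
            groups[y].append(self._scale(f))
        self.centroids = {
            y: [sum(v[d] for v in vs) / len(vs) for d in range(dims)] for y, vs in groups.items()
        }
        return self

    def _scale(self, f):
        return [(x - m) / s for x, m, s in zip(f, self.mu, self.sd)]

    def predict(self, window):
        z = self._scale(features(window))
        return min(self.centroids, key=lambda y: math.dist(z, self.centroids[y]))


# Hypothetical usage with made-up signals standing in for two gestures.
wave = [math.sin(2 * math.pi * k / 20) for k in range(40)]
train = [[0.2 * x + 0.01 * j for x in wave] for j in range(3)]
train += [[x + 0.5 + 0.01 * j for x in wave] for j in range(3)]
labels = ["index_tap"] * 3 + ["fist"] * 3
model = NearestCentroid().fit(train, labels)
print(model.predict([0.22 * x for x in wave]))  # index_tap
```

Nearest-centroid is chosen here only because it fits in a few lines without external libraries; a real finger-level recognizer would need far richer features and models.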